Archive for February, 2015

Null hypothesis significance testing procedure (NHSTP) psyched out

My colleague Brooks Henderson alerted me to this new policy by the editors of the journal Basic and Applied Social Psychology (BASP) to ban the NHSTP. According to the editorial in their Feb 2015 issue, authors must remove all p-values and the like and not refer to “significant” differences. They also banned confidence intervals, which really makes this new policy onerous, in my opinion.

I do see the sense of focusing on effect sizes and allowing the readers, presumably subject matter experts, to judge their importance. However, although they do “encourage the use of larger sample sizes”, it makes no sense, I feel, to disregard how much a small study adds to the uncertainty of its results.

Blaming the misuse of NHSTP, and p-values in particular, for bad science is like letting a bad guy go by saying the gun is at fault.

Are dogs right-pawed or left?

Last week I judged a number of entrants in a 7th grade science fair. The one I liked the best investigated a number of dogs to see which paw they favored. This depended on which hand the student held out. When he held out his left hand, all dogs offered up their right paw. But when he held out his right hand, half of the canines shook it with their left paw. I conclude from this that lefties are far more common among dogs than among humans, who favor their right hand by a ratio of 9-to-1. Based on what I read here in the Washington Post and see published on the internet, my guess is that dogs are split 50/50 left versus right. The same may be true for cats, although they might be slightly more likely to be right-pawed, according to a study noted in the Post article.

Just for fun, test your pet by putting a snack just barely within their reach. Which paw do they put out? Make a note. Do it again a number of times. If you see what seems to be a significant bias to left or right, let me know.
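If you want a rough check on whether your tally really leans one way, here is a minimal sketch of an exact two-sided binomial test against a 50/50 split. The function name and the example counts are made up for illustration, and it assumes each reach for the snack is an independent trial, which a pet that learns the game may well violate.

```python
from math import comb


def binomial_two_sided_p(right_count: int, trials: int, p0: float = 0.5) -> float:
    """Exact two-sided binomial p-value under the null that P(right paw) = p0.

    Sums the probability of every outcome that is no more likely than the
    observed count (hypothetical helper, not from any library).
    """
    if trials <= 0 or not 0 <= right_count <= trials:
        raise ValueError("need trials > 0 and 0 <= right_count <= trials")
    # Probability of each possible right-paw count under the null.
    pmf = [comb(trials, i) * p0**i * (1 - p0) ** (trials - i)
           for i in range(trials + 1)]
    observed = pmf[right_count]
    # Small relative tolerance guards against floating-point ties.
    total = sum(q for q in pmf if q <= observed * (1 + 1e-9))
    return min(1.0, total)


if __name__ == "__main__":
    # Made-up tally: 9 right-paw reaches in 10 tries.
    print(round(binomial_two_sided_p(9, 10), 4))
```

With the made-up tally of 9 right-paw reaches in 10 tries, the p-value comes to 22/1024, or about 0.021. An even 5-and-5 split returns 1.0, meaning no evidence of a bias at all.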

Lightening up the load on birds and bees

My former neighbor Phil–a bee-keeper–told me he loaded up too many hives in his truck on a run to California and it went over-weight for the regulations on the road home. However, Phil beat the highway inspectors by banging on the side with a hammer as he drove onto the scale. The bees flew up in the air and took down the measurement just enough for a pass–avoiding a hefty fine.

I always wondered if Phil was ‘bee-essing’ me, but a recent study by a Stanford scientist, reported here by NewScientist, indicates that this trick might be bang on. The only catches are that the flyers (in this case Pacific parrotlets) must flap in sync and the weight must be taken on the upstroke. This whole idea would backfire badly on the downstroke, when the weight of the flyers comes back double.

Bee-leave it or not.

Picking on P in these times of measles

Posted by mark in science, Uncategorized on February 3, 2015

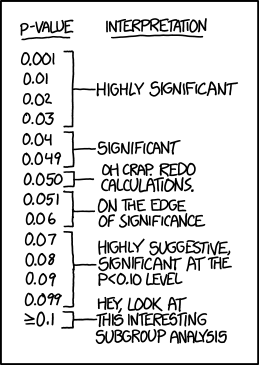

Randall Munroe takes a poke at over-valuers of p in this XKCD cartoon

Nature weighed in with their shots against scientists who misuse P values in this February 2014 article by statistics professor Regina Nuzzo. She bemoans the data dredgers who come up with attention-getting counterintuitive results using the widely accepted 0.05 P filter on long-shot hypotheses. A prime example is the finding by three University of Virginia researchers that moderates literally perceived shades of gray more accurately than extremists on the left and right (P=0.01). As they admirably admitted in this follow-up report on Restructuring Incentives and Practices to Promote Truth Over Publishability, this controversial effect evaporated upon replication. This chart on probable cause reveals that these significance chasers produce results with a false-positive rate of near 90%!
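For the curious, the near-90% figure can be roughly reproduced with a few lines of arithmetic. This is only a sketch under my own assumptions: that a "long shot" means prior odds of 19-to-1 against a real effect, and that the evidence in a P value is capped by the Sellke-Bayarri-Berger bound, which says the Bayes factor in favor of the null is at least -e·p·ln(p) when p < 1/e. The function names are hypothetical.

```python
from math import e, log


def min_bayes_factor(p: float) -> float:
    """Lower bound on the Bayes factor favoring the null: -e * p * ln(p).

    The bound only applies for 0 < p < 1/e.
    """
    if not 0 < p < 1 / e:
        raise ValueError("bound applies only for 0 < p < 1/e")
    return -e * p * log(p)


def false_alarm_probability(p: float, prior_odds_against: float) -> float:
    """Smallest posterior probability of no real effect, given the P value
    and the prior odds against the hypothesis (assumed, e.g. 19 for 19-to-1)."""
    posterior_odds_null = prior_odds_against * min_bayes_factor(p)
    return posterior_odds_null / (1 + posterior_odds_null)


if __name__ == "__main__":
    for p in (0.05, 0.01):
        print(p, round(false_alarm_probability(p, 19), 2))
```

Under these assumptions a long shot that squeaks by at P=0.05 is a false alarm at least 89% of the time, and even at P=0.01 the figure is about 70%. Because the bound is the most generous reading of the evidence, the true false-alarm rate could be higher still.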

Nuzzo lays out a number of proposals to put a damper on overly-confident reports on purported scientific studies. I like the preregistered replication standard developed by Andrew Gelman of Columbia University, which he noted in this article on The Statistical Crisis in Science in the November-December issue of American Scientist. This leaves scientists free to pursue potential breakthroughs at early stages when data remain sketchy, while subjecting them to rigorous standards further on—prior to publication.

“The irony is that when UK statistician Ronald Fisher introduced the P value in the 1920s, he did not mean it to be a definitive test. He intended it simply as an informal way to judge whether evidence was significant in the old-fashioned sense: worthy of a second look.”