Archive for category science

Believe it or not–sweet statistics prove that you can lose weight by eating chocolate

A very happy lady munching on a huge candy bar caught my eye in The Times of India on Friday, May 25. Not the lady—the chocolate.

After tasting a variety of delectable darks from a chocolatier in Belgium many years ago, I became hooked. However, I never imagined this addiction would provide a side benefit of weight loss. It turns out that a clinical trial set up by journalist John Bohannon and two colleagues came up with this finding and showed it to be statistically significant. This made headlines worldwide.

Unfortunately, at least so far as I’m concerned, the whole study was a hoax based on deliberate application of junk science done to expose phony claims made by the diet industry.

It is remarkably easy to generate false-positive results that favor a dietary supplement. Simply measure a large number of things on a small group of people. Something is almost sure to emerge that, taken out of this context, tests statistically significant. Which outcome it will be, whether a drop in blood pressure, a loss in weight, or something else, is completely random.
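To see why, here is a minimal Python sketch of the arithmetic. It assumes each outcome is tested independently at the conventional 0.05 level and that no real effect exists at all. The outcome counts (1, 5 and 18) are hypothetical choices for illustration. Under a true null hypothesis each p-value is uniformly distributed between 0 and 1, so a quick simulation can double-check the formula.

```python
import random

def familywise_error(k: int, alpha: float = 0.05) -> float:
    """Chance that at least one of k independent null tests comes out 'significant'."""
    return 1.0 - (1.0 - alpha) ** k

def simulate(k: int, alpha: float = 0.05, trials: int = 100_000, seed: int = 1) -> float:
    """Monte Carlo check: under the null hypothesis each p-value is Uniform(0, 1)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        # Draw k null p-values and see whether any of them clears the alpha bar.
        if min(rng.random() for _ in range(k)) < alpha:
            hits += 1
    return hits / trials

for k in (1, 5, 18):
    print(f"{k:2d} outcomes: exact {familywise_error(k):.3f}, simulated {simulate(k):.3f}")
```

With 18 outcomes measured, the chance of at least one spurious "discovery" comes to about 60%, even though nothing is going on.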

Read the whole amazing story here.

My thinking is that, while Bohannon’s study did not prove that eating chocolate leads to weight loss, the subjects did in fact shed pounds faster than the controls. That is good enough for me. Any other studies showing just the opposite are now irrelevant; I will pay no attention to them.

Now, having returned from my trip to India, I am going back to dip into my hoard of dark chocolate.

Null hypothesis significance testing procedure (NHSTP) psyched out

My colleague Brooks Henderson alerted me to a new policy by the editors of the journal Basic and Applied Social Psychology (BASP) banning the NHSTP. According to the editorial in their February 2015 issue, authors must remove all p-values and the like and must not refer to “significant” differences. The editors also banned confidence intervals, which, in my opinion, really makes this new policy onerous.

I do see the sense in focusing on effect sizes and letting readers, presumably subject-matter experts, judge their importance. However, although the editors “encourage the use of larger sample sizes”, it makes no sense, I feel, to disregard how much small studies inflate the uncertainty of the results.
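A small Python sketch shows why sample size matters even when the reported effect size is identical. It assumes a two-group comparison summarized by Cohen’s d and uses the common large-sample approximation to the standard error of d. The effect size of 0.5 and the group sizes are hypothetical.

```python
import math
from statistics import NormalDist

def d_confidence_interval(d: float, n1: int, n2: int, level: float = 0.95) -> tuple[float, float]:
    """Approximate confidence interval for Cohen's d (large-sample normal approximation)."""
    se = math.sqrt((n1 + n2) / (n1 * n2) + d ** 2 / (2 * (n1 + n2)))
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for 95%
    return d - z * se, d + z * se

for n in (10, 30, 100):
    lo, hi = d_confidence_interval(0.5, n, n)
    print(f"n = {n:3d} per group: d = 0.50, 95% CI ({lo:.2f}, {hi:.2f})")
```

With ten subjects per group the interval runs from roughly -0.4 to 1.4, spanning both no effect and a large one. The same d from 100 per group pins it down to roughly 0.2 to 0.8. Reporting d alone hides that difference.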

Blaming NHSTP, and p-values in particular, for the bad science that comes from misusing them is like letting a bad guy go free by saying the gun is at fault.

Picking on P in these times of measles

Posted by mark in science, Uncategorized on February 3, 2015

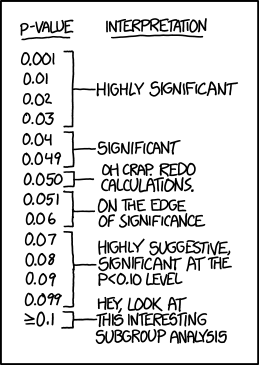

Randall Munroe takes a poke at over-valuers of p in this XKCD cartoon

Nature weighed in with its own shots at scientists who misuse P values in this February 2014 article by statistics professor Regina Nuzzo. She bemoans the data dredgers who come up with attention-getting, counterintuitive results by applying the widely accepted 0.05 P filter to long-shot hypotheses. A prime example is the finding by three University of Virginia researchers that political moderates literally perceived shades of gray more accurately than extremists on the left and right (P = 0.01). As they admirably admitted in this follow-up report on Restructuring Incentives and Practices to Promote Truth Over Publishability, this controversial effect evaporated upon replication. This chart on probable cause reveals that, for such long shots, a result at P = 0.05 has nearly a 90% chance of being a false positive!
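The arithmetic behind a figure like that 90% can be sketched in a few lines of Python. This assumes the chart rests on the Sellke-Bayarri-Berger lower bound on the Bayes factor, -e·p·ln(p), which takes the evidence against “no effect” at its strongest. The prior probabilities of a real effect, 5% for a 19-to-1 long shot and 50% for a toss-up, are illustrative choices.

```python
import math

def prob_no_real_effect(p: float, prior_real: float) -> float:
    """Lower bound on the chance that a 'significant' result is a false alarm.

    Uses the minimum Bayes factor -e * p * ln(p) (valid for p < 1/e),
    i.e. the evidence against the null is taken at its strongest.
    """
    if not 0 < p < 1 / math.e:
        raise ValueError("bound applies only for 0 < p < 1/e")
    min_bayes_factor = -math.e * p * math.log(p)     # smallest possible odds multiplier for the null
    prior_odds_null = (1 - prior_real) / prior_real   # e.g. 19-to-1 against for a long shot
    posterior_odds_null = prior_odds_null * min_bayes_factor
    return posterior_odds_null / (1 + posterior_odds_null)

for label, prior in (("long shot", 0.05), ("toss-up", 0.50)):
    for p in (0.05, 0.01):
        print(f"{label:9s} P = {p}: at least {prob_no_real_effect(p, prior):.0%} chance of no real effect")
```

Under those assumptions, a P = 0.05 result on a long shot still leaves at least an 89% chance of no real effect, in line with the near-90% figure. The same P value on a toss-up drops that to about 29%.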

Nuzzo lays out a number of proposals to put a damper on overly confident reports of purported scientific findings. I like the preregistered replication standard developed by Andrew Gelman of Columbia University, which he discusses in this article on The Statistical Crisis in Science in the November-December 2014 issue of American Scientist. This leaves scientists free to pursue potential breakthroughs at early stages, when data remain sketchy, while subjecting their findings to rigorous standards further on, prior to publication.
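A rough Python simulation illustrates why a two-stage, preregistered replication helps. It assumes that no real effects exist and that the exploratory stage screens a hypothetical 20 outcomes, carrying forward only the outcome with the smallest p-value. The confirmatory stage then tests that single outcome once on fresh, independent data. Null p-values are drawn as Uniform(0, 1).

```python
import random

def false_claim_rates(k: int = 20, alpha: float = 0.05, trials: int = 100_000, seed: int = 7):
    """Compare one-stage data dredging with a two-stage (explore, then confirm) design."""
    rng = random.Random(seed)
    dredged = confirmed = 0
    for _ in range(trials):
        # Stage 1: exploratory look at k outcomes, none of which has a real effect.
        best_p = min(rng.random() for _ in range(k))
        if best_p < alpha:
            dredged += 1  # a one-stage study would publish this "finding"
            # Stage 2: the preregistered outcome is retested once on fresh data.
            if rng.random() < alpha:
                confirmed += 1
    return dredged / trials, confirmed / trials

one_stage, two_stage = false_claim_rates()
print(f"false claims, dredging only:     {one_stage:.3f}")
print(f"false claims, after replication: {two_stage:.3f}")
```

The simulated rates should land near the analytic values of about 0.64 for dredging alone and about 0.03 after the confirmatory stage. The exploration stays free, and the gatekeeping happens before publication.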

“The irony is that when UK statistician Ronald Fisher introduced the P value in the 1920s, he did not mean it to be a definitive test. He intended it simply as an informal way to judge whether evidence was significant in the old-fashioned sense: worthy of a second look.”

The best accidental inventions of all time

I learned from my latest issue of Chemical and Engineering News that Stanley Stookey of Corning Glass Works died last month at age 99. In 1952 he mistakenly heated an alumino-silicate glass to 900 degrees C when he meant to top out at 600. According to the CEN story, after much cursing Stookey found that, instead of the expected molten mess, the glass had crystallized into a new type of material, a glass ceramic, that proved to be “harder than carbon steel yet lighter than aluminum—shatterproof.”

Being in the business of planned experimentation, I am always amazed to come across stories like this of serendipitous science. Clearly chance favors the prepared mind, because most such momentous discoveries are made by world-class scientists like Stookey, whether in chemistry, physics, or other fields.

I am a huge fan of 3M Post-It® Notes, not only for their incredible usefulness, but also because it delights me to think of my fellow Minnesotan Art Fry coming up with these by accident. For a list including him and a dozen other experts in their fields who turned mishaps into inventions, see 13 Accidental Inventions That Changed The World by Drake Baer of Business Insider. The one I like best is George Crum (great surname for a chef!), who reacted to a customer complaining about his French fries by slicing the potatoes into ridiculously thin, hard-fried pieces, which, as the legend goes, gave us the potato chip. Never mind that, according to this Snopes investigation, it probably was his sister Katie who made the accidental discovery. Either way, this works out to be a delicious story.

My advice to our clients is to keep a close watch for any strange results that crop up as statistically deviant in the course of a designed experiment. They may turn out to be really Crummy!
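One way to put that watchfulness into practice is to check how far each response sits from the rest. The Python sketch below is a simplified illustration, not the diagnostics of any particular software package. It treats a set of supposedly identical runs with a mean-only model, computes externally studentized (deleted) residuals, and flags any beyond a hypothetical cutoff of ±3. The data are made up.

```python
import math
from statistics import mean

def externally_studentized(y: list[float]) -> list[float]:
    """Deleted (externally studentized) residuals for a mean-only model.

    Each point is judged against the spread of the *other* points,
    so a single wild value cannot hide by inflating its own yardstick.
    """
    n = len(y)
    if n < 3:
        raise ValueError("need at least 3 observations")
    ybar = mean(y)
    out = []
    for i, yi in enumerate(y):
        rest = y[:i] + y[i + 1:]
        rbar = mean(rest)
        # Residual standard deviation with point i left out (n - 1 points, 1 parameter).
        s_del = math.sqrt(sum((r - rbar) ** 2 for r in rest) / (n - 2))
        # Every point has leverage 1/n in a mean-only model.
        out.append((yi - ybar) / (s_del * math.sqrt(1 - 1 / n)))
    return out

runs = [10.1, 9.8, 10.0, 10.2, 9.9, 10.1, 13.0, 10.0]  # made-up responses
flags = [i for i, t in enumerate(externally_studentized(runs)) if abs(t) > 3.0]
print("runs worth a closer look:", flags)
```

Only the 13.0 run (index 6) gets flagged. Whether it signals a blunder or a Crum-style discovery is for the experimenter to find out.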

The rarest of birds—a reproducible result from a scientific study

Posted by mark in science, Uncategorized on February 3, 2014

In his new New York Times column, Raw Data, science writer George Johnson laments experimenters’

“ways of unknowingly smuggling one’s expectations into the results, like a message coaxed from a Ouija board.”

– Science Times, 1/21/14

This, of course, leads to irreproducible findings.

As a case in point, only 6 of 53 landmark papers about cancer found support in follow-up studies, even with the help of the original scientists working in their own labs, according to an article in the Challenges in Irreproducible Research archive of Nature cited by Johnson.

That is discouraging, but I am not surprised. I feel fairly sure that any assertions of import eventually get filtered very rigorously until only those that reproduce reliably make it through.

The trick is to remain extremely skeptical of initial reports, especially those that get trumpeted and reverberate around the popular press and the internet. Evidently it is human nature to presume that an assertion repeated often enough must be true, even though it has not yet been reproduced. Saying it’s so does not make it so.

Kids & Science

Posted by mark in pop, science, Uncategorized on June 23, 2013

I am heartened to hear of great work being done by current and former colleagues to get K-12 kids involved in Science, Technology, Engineering, and Mathematics (STEM). For example, Columbia Academy, a middle school (grades 6-8) in Columbia Heights (just north of Minneapolis), held an Engineering and Science Fair last month where two of our consultants, Pat Whitcomb and Brooks Henderson, joined a score of other professional engineers who reviewed student projects. Winners will present their projects this summer at the University of Minnesota’s STEM Colloquium.

Also, I ran across a fellow I worked with at General Mills years ago who volunteers his time to teach middle-schoolers around the Twin Cities an appreciation for chemistry. He makes use of the American Chemical Society (ACS) “Kids & Chemistry” program, which offers complete instructions and worksheets for many great experiments at middle-school level. Follow this link to discover:

– Chemistry’s Rainbow: “Interpret color changes like a scientist as you create acid and base solutions, neutralize them, and observe a colorful chemical reaction.”

– Jiggle Gels: “Measure with purpose and cause exciting physical changes as you investigate the baby diaper polymer,* place a super-absorbing dinosaur toy in water, and make slime.”

– What’s New, CO2? “Combine chemicals and explore the invisible gas produced to discover how self-inflating balloons work.”

– Several other intriguing activities contributed by ACS members.

Kudos to all the scientists, engineers, mathematicians, and statisticians who are engaging kids in STEM!

*(The super-slurpers invented by the diaper chemists really are quite amazing, as I’ve learned from semi-quantitative measurements of weight before and after soakings by my grandson. Thank goodness! Check out this video by “Professor Bunsen”, which includes a trick for recovering the liquids that I am not going to try.)

Brain scientists flunk statistical standards for power

Last week The Scientist reported that “Bad Stats Plague Neuroscience”. According to researchers who dissected 730 studies published in 2011, neuroscientists pressed ahead with findings on the basis of only 8 percent median statistical power. This falls woefully short of the 80 percent power that statisticians advise for experimental work. It seems that the pressure to publish overwhelms the need to run enough tests to detect important effects.
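For a sense of what 80 percent power demands, here is a Python sketch using the normal approximation for a two-sided, two-sample comparison at α = 0.05. The standardized effect size of 0.5 and the small group size of 10 are hypothetical. An exact t-test calculation would call for slightly larger samples.

```python
import math
from statistics import NormalDist

Z = NormalDist()  # standard normal distribution

def power_two_sample(d: float, n_per_group: int, alpha: float = 0.05) -> float:
    """Approximate power of a two-sided, two-sample test for standardized effect d."""
    z_crit = Z.inv_cdf(1 - alpha / 2)
    shift = d * math.sqrt(n_per_group / 2)  # expected value of the test statistic
    return Z.cdf(shift - z_crit) + Z.cdf(-shift - z_crit)

def n_for_power(d: float, power: float = 0.80, alpha: float = 0.05) -> int:
    """Per-group size from the usual closed-form approximation (ignores the negligible far tail)."""
    z_sum = Z.inv_cdf(1 - alpha / 2) + Z.inv_cdf(power)
    return math.ceil(2 * (z_sum / d) ** 2)

print("per-group n for 80% power at d = 0.5:", n_for_power(0.5))
print(f"power with only 10 per group: {power_two_sample(0.5, 10):.0%}")
```

Under these assumptions, detecting a medium-sized effect with 80 percent power takes about 63 subjects per group, while 10 per group delivers only about 20 percent power.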

“In many cases, we’re more incentivized to be productive than to be right.”

– Marcus Munafo, University of Bristol, UK

Only 14 percent of biomedical results are wrong, after all–Is this comforting?

Posted by mark in science, Uncategorized on February 2, 2013

The Scientist reports here that new mathematical studies refute the earlier claim that most published medical research findings are false because of small study sizes and bias. I suppose, considering the original assertion that “most” announced discoveries are wrong, we can literally live with a false-positive rate of ‘only’ 14% for findings that relate ultimately to our well-being. But the best advice is:

It is still important to report estimates and confidence intervals in addition to or instead of p-values when possible so that both statistical and scientific significance can be judged by readers.

– Leah R. Jager, Jeffrey T. Leek (“Empirical estimates suggest most published medical research is true”)
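How small studies feed false positives can be sketched with simple bookkeeping of true and false “hits.” The Python function below assumes a hypothetical share of tested hypotheses that are actually true, a significance level and a study power, and it ignores bias. It is not the estimation method behind Jager and Leek’s 14% figure.

```python
def false_discovery_share(prior_true: float, power: float, alpha: float = 0.05) -> float:
    """Expected fraction of 'significant' findings that are false positives (no bias)."""
    true_hits = power * prior_true          # real effects that get detected
    false_hits = alpha * (1 - prior_true)   # null effects that slip through anyway
    return false_hits / (false_hits + true_hits)

# Hypothetical field where 1 in 10 tested hypotheses is true.
for pw in (0.8, 0.2):
    print(f"power {pw:.0%}: {false_discovery_share(0.10, pw):.0%} of discoveries are false")
```

With power at 80 percent, about 36% of the discoveries in this hypothetical field would be false. Cut power to 20 percent and the share climbs to about 69%, which is why underpowered studies matter so much.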

The amazing persistence of biased scientific results—Popeye’s spinach found fraudulent

Posted by mark in science, Uncategorized on December 5, 2012

I recently completed a series of webinars on using graphical diagnostics to deal with bad experimental data.* The first thing I focused on was avoiding confirmation bias: hearing what you want to hear, as in the persistent hope for cold fusion. See more cases of confirmation bias in this account by Peter Bowditch in Australasian Science.

I came across another interesting example of the persistence of wished-for results in a review** of Samuel Arbesman’s new book, The Half-Life of Facts. It turns out that spinach really does not deliver the amount of iron that my mother always believed would make it worth our eating this horrible food. She was a child of the 1930s, when it was widely believed that the edible (?) plant contained 35 milligrams of iron per serving, a tremendous concentration. However, the actual value is 3.5 mg; the chemist who first analyzed it misplaced the decimal point when transcribing the data from his notebook in 1870! In 1937 this error was finally corrected, but my mom never got the memo, unfortunately for me and my six younger siblings. ; )

*“Real-Life DOE” presentation, posted here

** The Scientific Blind Spot by David A. Shaywitz in the 11/19/12 issue of Wall Street Journal

A strange pink elephant — the Higgs boson

Posted by hank in science, Uncategorized on July 9, 2012

In our business we devote a lot of energy to convincing experimenters that they must conduct enough runs to develop the statistical power needed to detect an effect of interest. What amazed me about the recent discovery of the Higgs boson is the sample size required to see this “strange pink elephant”, as it’s described in the embedded explanatory video cartoon. The boffins of CERN took 40 million measurements per second for 20 years. These physics fellows cannot be topped for being persistent, tenacious, dogged and determined. Good for them and, I suppose, us.

“If the particle doesn’t exist, one in 3.5 million is the chance an experiment like the one announced would nevertheless come up with a result appearing to confirm it does exist.”

– Carl Bialik, ‘The Numbers Guy’ for Wall Street Journal explaining in his July 7-8 column the statistical meaning of CERN’s 5 sigma standard of certainty (see How to Be Sure You’ve Found a Higgs Boson).
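Bialik’s one-in-3.5-million figure can be checked in a couple of lines of Python. This assumes the 5-sigma standard is read as a one-sided tail probability of the standard normal distribution, which is how particle physicists usually quote it.

```python
from statistics import NormalDist

# One-sided tail area beyond 5 standard deviations of a standard normal.
p_five_sigma = NormalDist().cdf(-5.0)
print(f"p = {p_five_sigma:.3g}, about 1 in {1 / p_five_sigma:,.0f}")
```

The result comes to roughly 1 in 3.5 million, matching the figure in the quote.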